Eye Tracking Technology

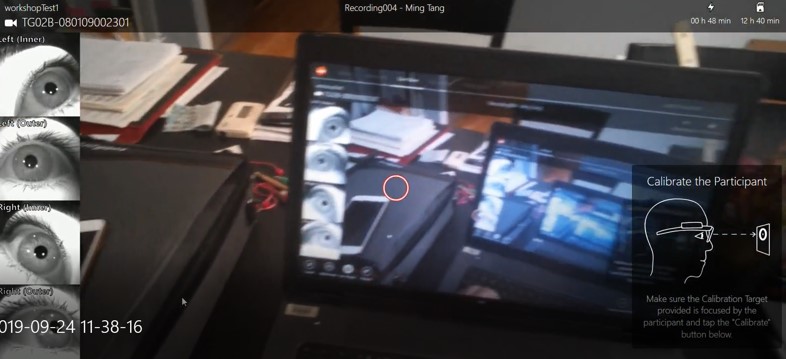

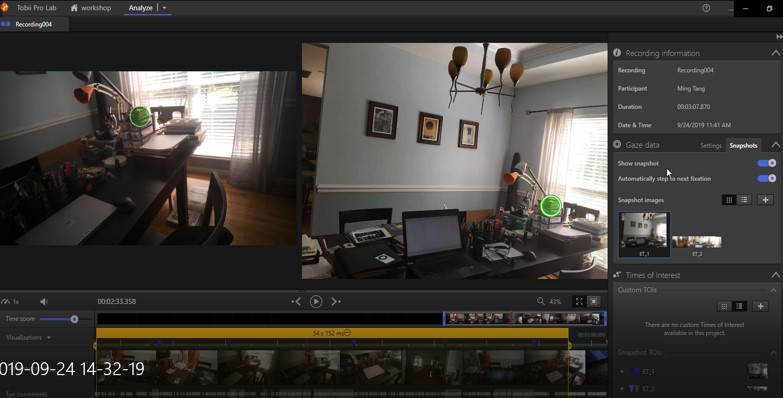

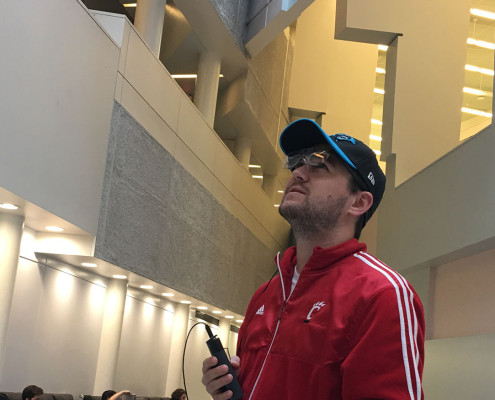

1. Wearable Eye-tracking technology

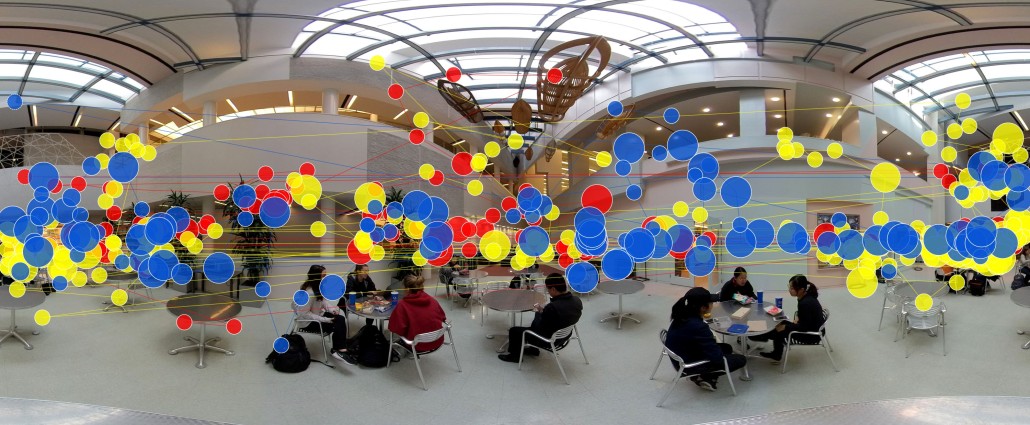

This wearable eye-tracking (ET) device includes several components: illuminators, cameras, and a data collection and processing unit that runs the image-detection, 3D eye-model, and gaze-mapping algorithms. Compared with a screen-based ET device, the most significant differences of the wearable device are its binocular coverage, its field of view (FOV), and the effect of head tilt on the glasses-mounted eye tracker. It also avoids potential experimental bias arising from the display size or pixel dimensions of a screen. As with screen-based ET, the images captured by the wearable device's camera are used to locate the pupil and the glints (reflections of the illuminators on the cornea). This information, combined with a 3D eye model, is then used to estimate each participant's gaze vector and gaze point.
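The step from pupil and glint positions to a gaze point can be illustrated with a simplified 2D technique, pupil-centre corneal-reflection (PCCR) regression: the vector from the glint to the pupil centre is mapped to gaze coordinates by a second-order polynomial fitted on calibration targets. This is a minimal sketch under stated assumptions (NumPy available, synthetic data), not the wearable device's 3D eye-model pipeline; the function names, the 3x3 calibration grid, and the synthetic "true" mapping are hypothetical.

```python
import numpy as np


def design_matrix(v: np.ndarray) -> np.ndarray:
    """Second-order polynomial terms of pupil-glint vectors v with shape (n, 2)."""
    vx, vy = v[:, 0], v[:, 1]
    return np.column_stack([np.ones_like(vx), vx, vy, vx * vy, vx**2, vy**2])


def fit_gaze_mapping(pupil, glint, targets) -> np.ndarray:
    """Least-squares fit from pupil-glint vectors to known calibration target positions."""
    v = np.asarray(pupil, dtype=float) - np.asarray(glint, dtype=float)
    coeffs, *_ = np.linalg.lstsq(design_matrix(v), np.asarray(targets, dtype=float), rcond=None)
    return coeffs  # shape (6, 2): one column per gaze coordinate (x, y)


def estimate_gaze(coeffs: np.ndarray, pupil, glint) -> np.ndarray:
    """Map one or more pupil/glint measurements to gaze points with shape (n, 2)."""
    v = np.atleast_2d(np.asarray(pupil, dtype=float) - np.asarray(glint, dtype=float))
    return design_matrix(v) @ coeffs


def synthetic_targets(v: np.ndarray) -> np.ndarray:
    """Hypothetical 'true' mapping, used only to generate calibration data."""
    return np.column_stack([
        100 + 50 * v[:, 0] + 2 * v[:, 0] * v[:, 1],
        200 + 40 * v[:, 1] + 1.5 * v[:, 0] ** 2,
    ])


# Hypothetical 3x3 calibration grid of pupil positions, glint held at the origin.
pupil = np.array([[x, y] for y in (-1.0, 0.0, 1.0) for x in (-1.0, 0.0, 1.0)])
glint = np.zeros_like(pupil)
targets = synthetic_targets(pupil - glint)

coeffs = fit_gaze_mapping(pupil, glint, targets)
gaze = estimate_gaze(coeffs, [0.5, -0.5], [0.0, 0.0])  # a new, uncalibrated eye position
```

In practice the pupil and glint centres would come from the eye-camera images, and the quadratic terms let the fit absorb some of the nonlinearity between eye rotation and gaze position; a 3D eye model replaces this regression with explicit geometry.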


After the standard ET calibration and verification procedure, participants were instructed to walk through a defined space while wearing the glasses. The time of interest (TOI) was set to 60 seconds, bounded by defined start and end events, and captured the visual events that occurred over that period. Data were collected for both pre-conscious viewing (the first 3 seconds) and conscious viewing (after 3 seconds).
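The split between pre-conscious and conscious viewing can be expressed as a simple post-processing step over exported fixations. The sketch below assumes each fixation has an onset time relative to the TOI start event, a duration, and an area-of-interest (AOI) label; the `Fixation` record, the AOI names, and the rule of assigning a fixation to a phase by its onset are hypothetical choices, not the settings of the analysis software used in the study.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

TOI_LENGTH_S = 60.0       # length of the time of interest used in the study
PRECONSCIOUS_END_S = 3.0  # boundary between pre-conscious and conscious viewing


@dataclass
class Fixation:
    start_s: float     # onset, seconds after the TOI start event
    duration_s: float
    aoi: str           # hypothetical area-of-interest label, e.g. "sign_A"


def phase_of(fix: Fixation) -> Optional[str]:
    """Assign a fixation to a viewing phase by its onset; None if outside the TOI."""
    if fix.start_s < 0 or fix.start_s >= TOI_LENGTH_S:
        return None
    return "pre-conscious" if fix.start_s < PRECONSCIOUS_END_S else "conscious"


def summarize(fixations: Iterable[Fixation]) -> Dict[str, Dict[str, Tuple[int, float]]]:
    """Return {phase: {aoi: (fixation_count, dwell_time_s)}}."""
    counts = defaultdict(lambda: defaultdict(int))
    dwell = defaultdict(lambda: defaultdict(float))
    for fix in fixations:
        phase = phase_of(fix)
        if phase is None:
            continue
        counts[phase][fix.aoi] += 1
        # Clip fixations that run past the TOI end event.
        dwell[phase][fix.aoi] += min(fix.duration_s, TOI_LENGTH_S - fix.start_s)
    return {
        phase: {aoi: (n, round(dwell[phase][aoi], 3)) for aoi, n in per_aoi.items()}
        for phase, per_aoi in counts.items()
    }


# Hypothetical fixations for one participant.
fixations = [
    Fixation(0.4, 0.25, "sign_A"),
    Fixation(1.2, 0.30, "floor"),
    Fixation(2.9, 0.50, "sign_A"),   # starts before 3 s, so counted as pre-conscious
    Fixation(10.0, 0.40, "sign_A"),
    Fixation(59.8, 0.60, "sign_B"),  # clipped at the 60 s TOI end
]
summary = summarize(fixations)
```

Assigning by onset keeps each fixation in exactly one phase; splitting a fixation that straddles the 3-second boundary would be an equally reasonable alternative.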

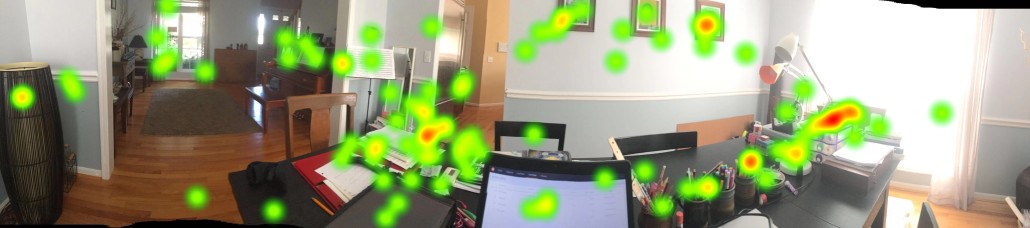

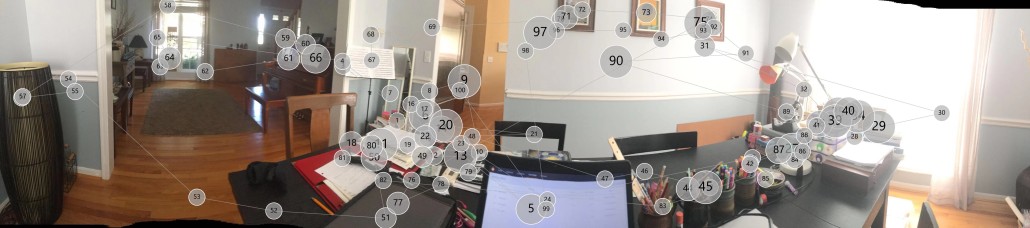

We also conducted a screen-based eye-tracking study and compared the results.

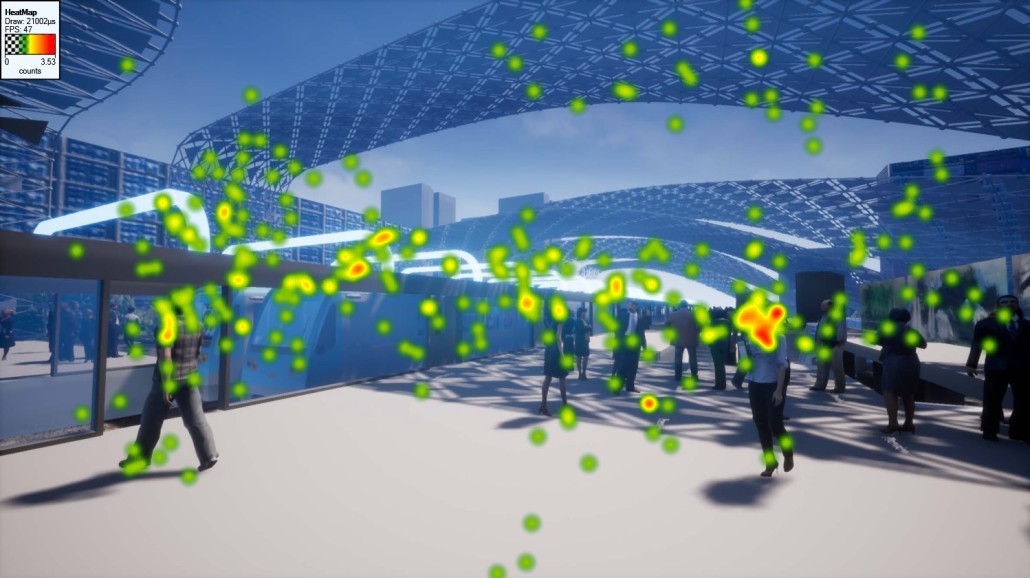

2. Screen-based Eye-tracking technology

This method helped show reviewers how well an existing place or a proposed design performed in terms of user experience. Moreover, while the fundamental visual elements that attract human attention and trigger conscious viewing are well established and are sometimes incorporated into signage design and placement, signs face an additional challenge: they must compete for viewers’ visual attention with the visual elements of the surrounding built and natural environments. Tools and methods are therefore needed to support “contextually sensitive” design and placement by assessing how well signs in situ capture the attention of their intended viewers.
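For the screen-based study, one way to assess whether a sign in a scene image captures attention is to draw an area of interest (AOI) around the sign and test each gaze sample against it. The sketch below is a minimal, sample-based illustration (analysis tools usually compute such metrics on detected fixations instead); the rectangular AOI, the 50 Hz sampling interval, the helper names, and the sample data are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float]  # (time_s, x_px, y_px) from a screen-based tracker


@dataclass(frozen=True)
class AOI:
    """Axis-aligned rectangle around a sign in the stimulus image, in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(samples: Sequence[Sample], aoi: AOI,
                sample_interval_s: float) -> Tuple[Optional[float], float]:
    """Time of the first gaze sample inside the AOI (None if never) and dwell time in seconds."""
    first_hit: Optional[float] = None
    hits = 0
    for t, x, y in samples:
        if aoi.contains(x, y):
            hits += 1
            if first_hit is None:
                first_hit = t
    return first_hit, hits * sample_interval_s


def noticed_share(per_participant: List[Sequence[Sample]], aoi: AOI,
                  sample_interval_s: float) -> float:
    """Fraction of participants with at least one gaze sample on the AOI."""
    noticed = sum(1 for s in per_participant
                  if aoi_metrics(s, aoi, sample_interval_s)[0] is not None)
    return noticed / len(per_participant)


sign = AOI(800, 100, 1000, 250)             # hypothetical sign location on screen
p1 = [(0.00, 400, 300), (0.02, 850, 120), (0.04, 900, 200)]
p2 = [(0.00, 100, 700), (0.02, 120, 710)]
ttff, dwell = aoi_metrics(p1, sign, 0.02)   # 0.02 s between samples at an assumed 50 Hz
share = noticed_share([p1, p2], sign, 0.02)
```

Comparing `share` and the time to first hit across alternative sign placements gives a rough, context-aware indication of which placement draws attention sooner and more often.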


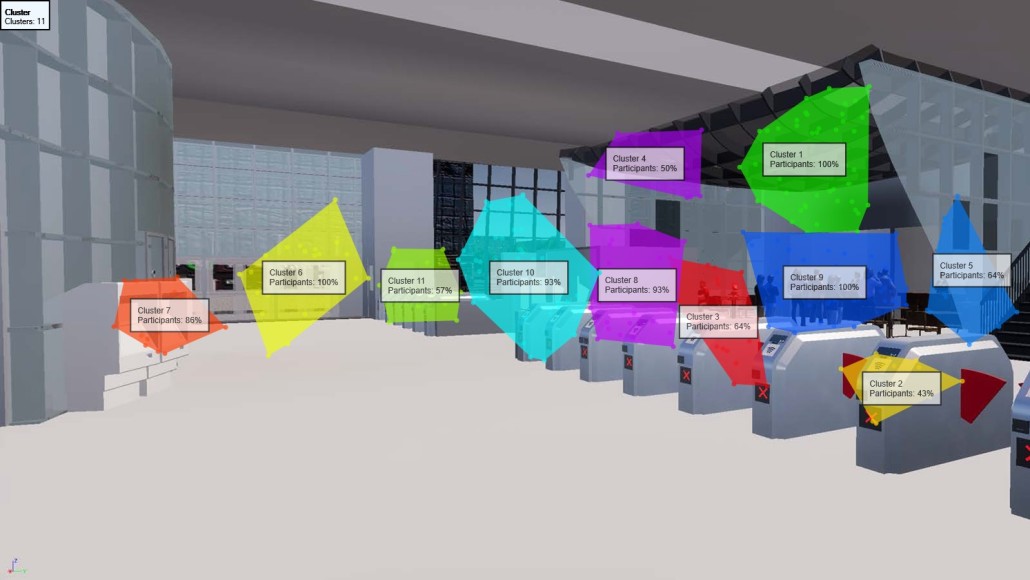

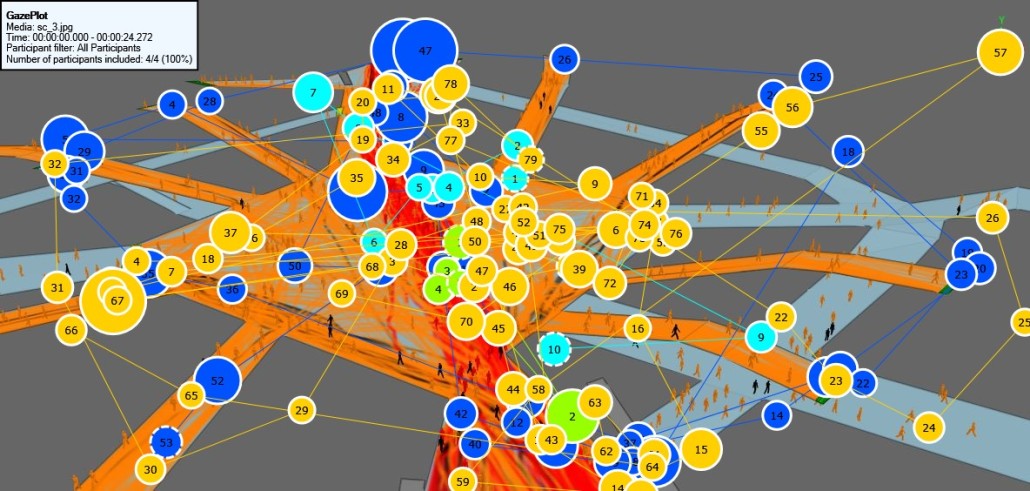

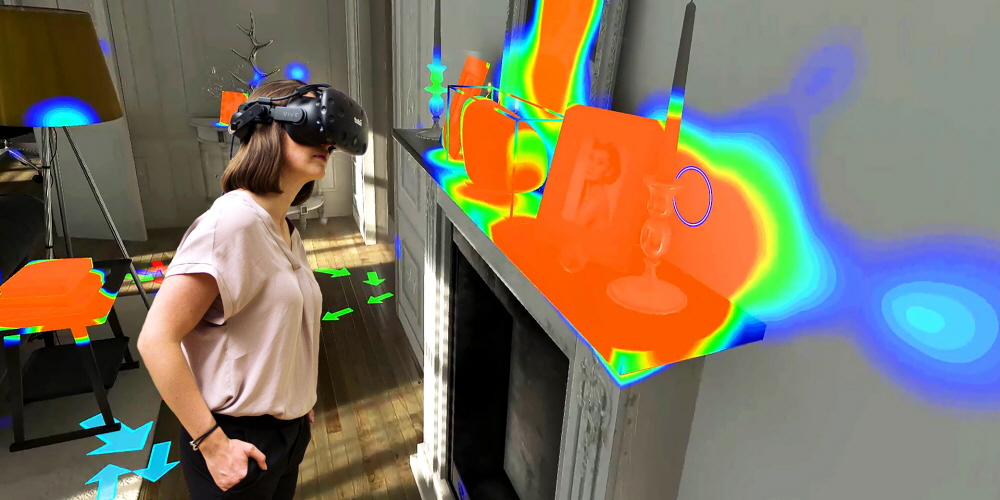

3. VR-based Eye-tracking technology

Eye-tracking technology enables new forms of interaction in VR, with benefits for hardware manufacturers, software developers, end users, and research professionals.

Papers:

Tang, M. “Analysis of Signage Using Eye-Tracking Technology.” Interdisciplinary Journal of Signage and Wayfinding, 02, 2020.

Tang, M. and Auffrey, C. “Advanced Digital Tools for Updating Overcrowded Rail Stations: Using Eye Tracking, Virtual Reality, and Crowd Simulation to Support Design Decision-Making.” Urban Rail Transit, December 19, 2018.