Call for enrollment: Metaverse development

Design in the Age of Metaverse and Extended Reality

ARCH 7036-004 (51920) / ARCH 5051-004 (51921). Elective Arch Theory Seminar. Spring semester. DAAP, UC.

Class time: Tuesdays and Thursdays, 12:30 pm-1:50 pm

Location: Hybrid (in person and online); CGC Lab @ DAAP; XR-Lab @ Digital Future Building.

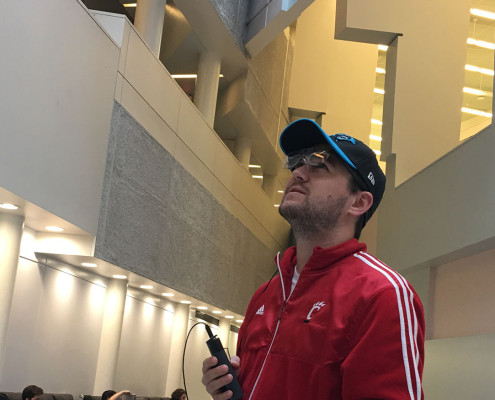

Instructor: Ming Tang, Director of the Extended Reality Lab (XR-Lab); Associate Professor, SAID, DAAP, University of Cincinnati

The course is open to all UC students of any major, both undergraduate (ARCH 5051) and graduate (ARCH 7036). If you are a student from another major and have trouble registering, please contact Prof. Ming Tang.

- For SAID graduate students who have previously taken the VIZ-3 course, this advanced-level course will allow you to develop XR-based presentations for your thesis exhibition or propose your own XR-related topics.

- For students with general design backgrounds (architecture, interior design, urban planning, landscape, industrial design, art, game, film, and digital media), this course will allow you to extend your creativity into XR and create an immersive experience.

- For students without previous experience in design fields (engineering, art & science, CCM, medicine, etc.), this course will be an excellent introduction to the realm of XR.

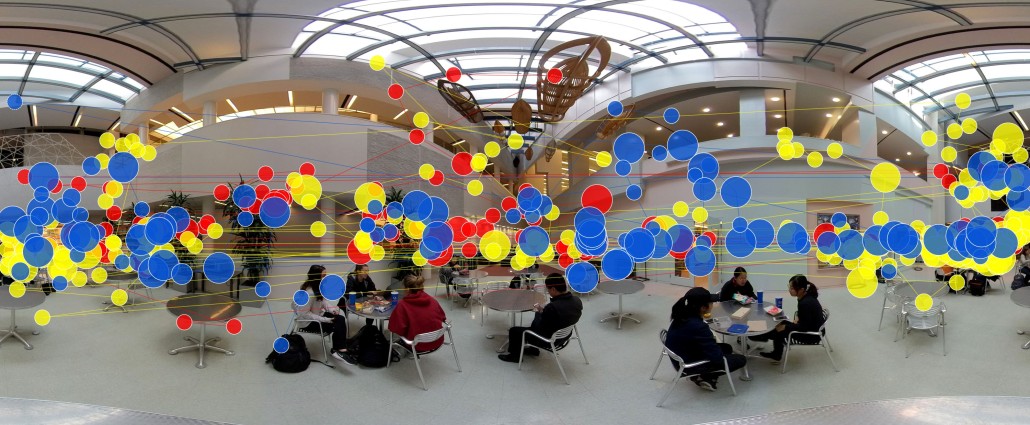

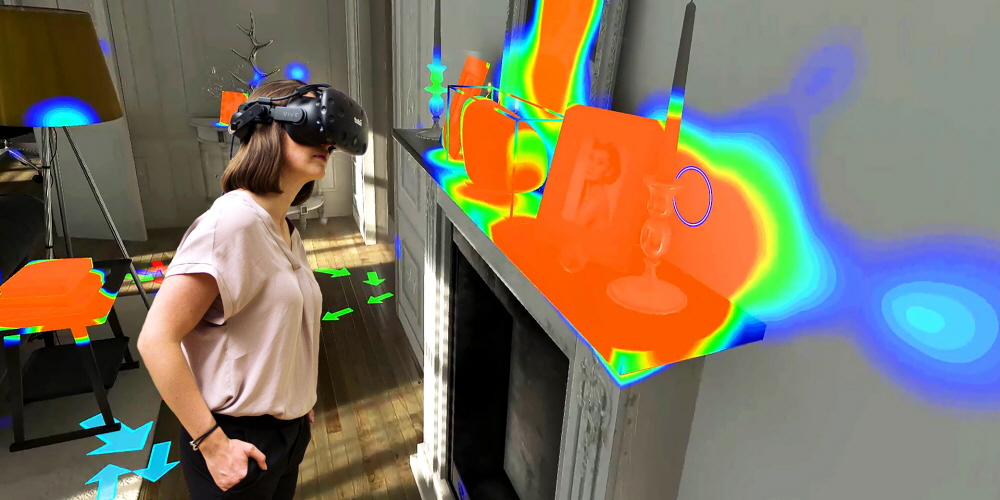

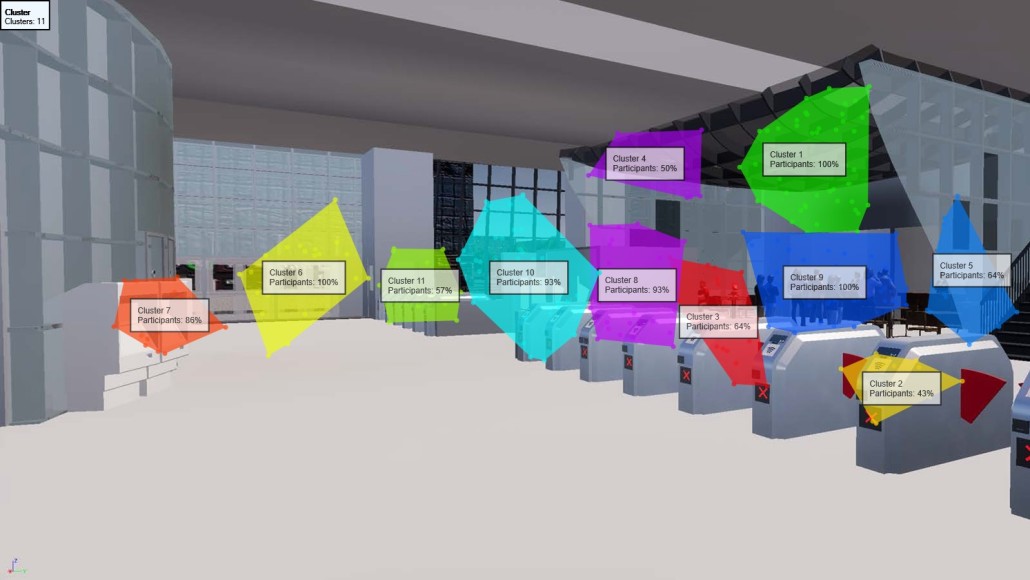

This seminar course focuses on the intersection of traditional design with immersive visualization technologies, including Virtual Reality, Augmented Reality, Digital Twin, and Metaverse. The class will explore new spatial experiences in the virtual realm and analyze human perception, interaction, and behavior in the virtual world. Students will learn both the theoretical framework of XR and hands-on skills in XR development. The course will expose students to XR technologies, supported by the new XR-Lab at the UC Digital Future Building. Students are encouraged to propose individual or group research on future design with XR.

Hardware: VR headsets and glasses are available at the XR-Lab at DF.

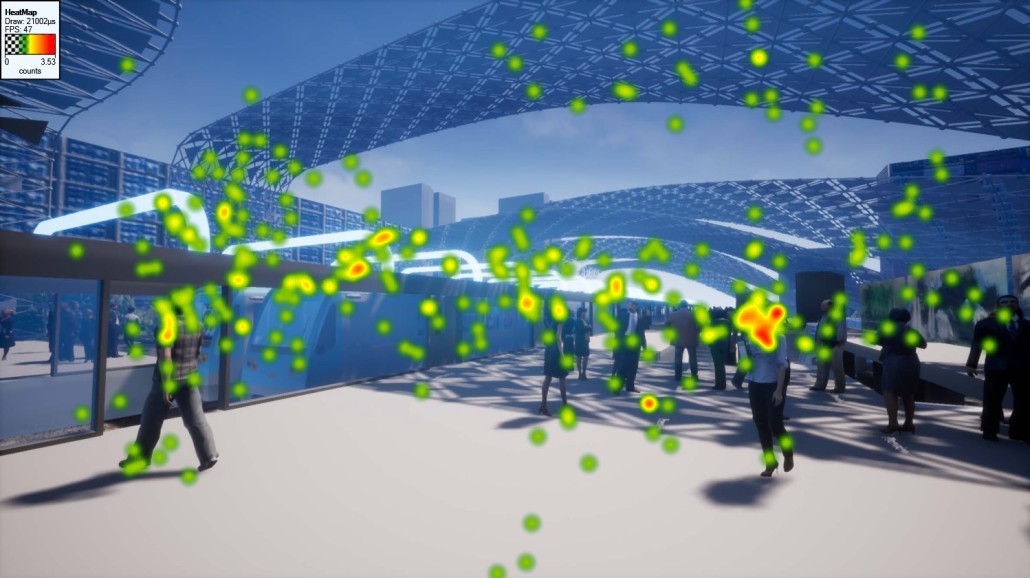

Software: Unreal Engine 5, available at the DAAP CGC Lab.

Course Goals and Outcome

Students are encouraged to look at architecture, motion pictures, game design, 3D design, and other digital art media. As students develop their research, these fields become invaluable sources of inspiration and motivation. The instructor supports all attempts by students to experiment with techniques that interest and challenge them above and beyond what is taught in the classroom.

Students completing this course will be able to identify theories and research methods related to eXtended Reality (XR), including (1) history and theory related to XR (VR+AR+MR); (2) emerging trends related to the Metaverse and their impact on design.

The course focuses on hands-on skills in XR development through Unreal Engine 5. Students will propose their XR projects and develop them over the semester.

This course will be offered in a hybrid format, with online sessions and in-person sessions at the CGC Lab and the Digital Future Building, depending on each project’s progress. Students will propose their projects and have 15 weeks to complete development.

Prerequisite: None. However, basic 3D modeling skills are preferred.

Weekly Schedule

- History and the workflow of metaverse development

- Project management on GitHub (Inspiration from the film due)

- Modeling (XR vignette proposal due)

- Material & Lighting (Project development starts)

- Blueprint, node-based programming

- Character and Animation

- Interaction and UX; deploying to XR

- Project development time

- Project development time

- Project development time

- Project development time

- Project development time

- Project development time

- Project development time

- Project development time

- End-of-semester gallery show at DF building

Examples of student projects on XR development

- Future Retail

- Burning Man

- Seminar: XR

- Driving simulation

- Bus stop design in Metaverse

- Price Hill

- Rural mobile living

- Future uptown Cincinnati

- Train station in Beijing

- Transit hub in Cincinnati

- Thesis: Layered space

- Thesis: Cyber-Physical Experiences: Architecture as Interface

- Thesis: Virtually Interactive DAAP

Recommended podcast on Metaverse